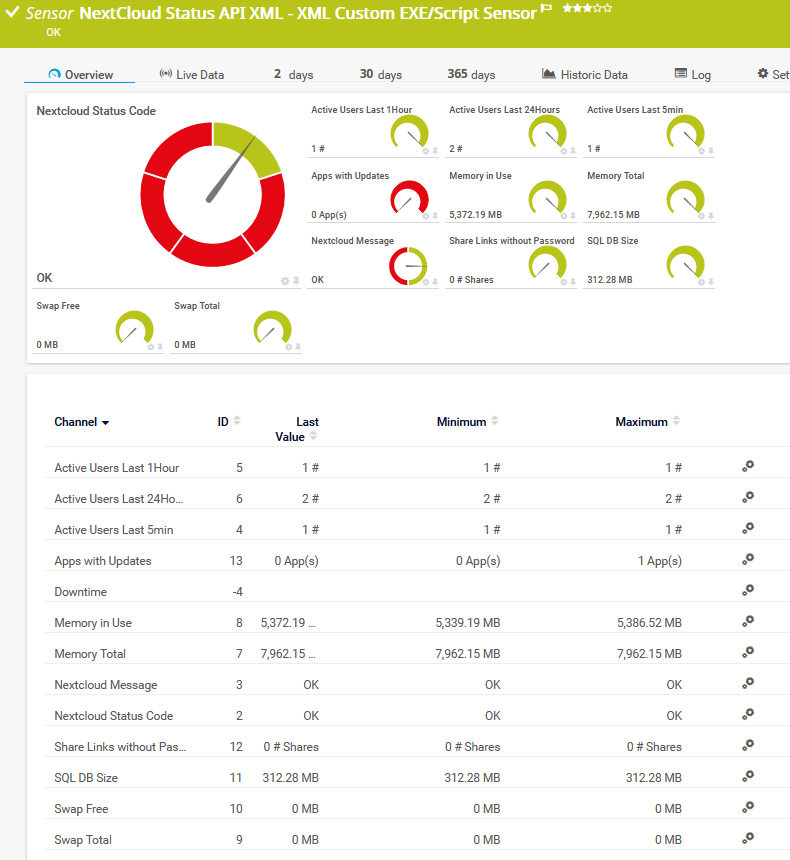

To monitor your Nextcloud instance through its XML API with PRTG, follow the steps below.

The script is available at https://github.com/flostyen/PRTGScripts/tree/master/PRTG-NextCloud-Status which is a fork of https://github.com/freaky-media/PRTGScripts/blob/master/PRTG-NextCloud-Status/ (I simply added TLSv1.2 support and adjusted the how-to guide; all the work was done by freaky-media 😊)

1. Installation in PRTG

1.1 Copy the PS1 file (PRTG_NextCloud.ps1) to your PRTG server, into the folder C:\Program Files (x86)\PRTG Network Monitor\Custom Sensors\EXEXML.

If you want to monitor Nextcloud systems from your PRTG remote probes, copy the script to the same folder on each remote probe.

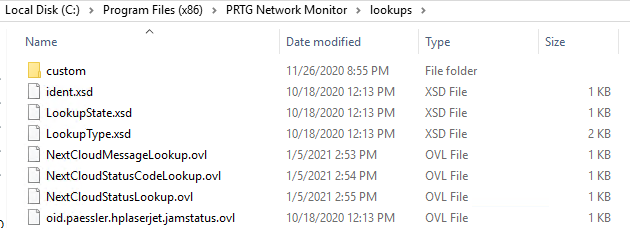

1.2 Create the following lookup files in the folder C:\Program Files (x86)\PRTG Network Monitor\lookups\custom of your PRTG installation: NextCloudMessageLookup.ovl, NextCloudStatusCodeLookup.ovl, NextCloudStatusLookup.ovl

1.3 Reload Lookups:

PRTG GUI -> Setup -> System Administration -> Administrative Tools -> Load Lookups and File Lists -> Go! Button
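Before moving on to the sensor configuration, it can help to confirm that the script and the three lookup files ended up in the right folders. The following is a minimal sketch of such a check, assuming Python is available on the machine (the helper name `missing_files` is made up for this illustration and is not part of the PRTG scripts):

```python
from pathlib import Path

# File names taken from the installation steps above.
SCRIPT = "PRTG_NextCloud.ps1"
LOOKUPS = [
    "NextCloudMessageLookup.ovl",
    "NextCloudStatusCodeLookup.ovl",
    "NextCloudStatusLookup.ovl",
]


def missing_files(prtg_root):
    """Return the expected files (relative to prtg_root) that do not exist yet."""
    root = Path(prtg_root)
    # The script belongs in Custom Sensors\EXEXML, the lookups in lookups\custom.
    expected = [root / "Custom Sensors" / "EXEXML" / SCRIPT]
    expected += [root / "lookups" / "custom" / name for name in LOOKUPS]
    return [str(path.relative_to(root)) for path in expected if not path.is_file()]
```

Calling `missing_files(r"C:\Program Files (x86)\PRTG Network Monitor")` on the PRTG server (or on a remote probe, where only the script is relevant) returns an empty list once everything is in place.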

2. Configuration in PRTG

2.1 Add the sensor "EXE/Script Advanced"

2.2 Change the sensor name

2.3 Select the PowerShell script PRTG_NextCloud.ps1 as the script to run.

2.4 Add the following parameters, replacing the placeholders with your own values:

-NCusername *AnExtraNCAdminUser* -NCpassword *StrongPassSentence* -NCURL *YourNCFQDN*
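To make the role of the three lookup files clearer, here is a rough sketch of the data flow behind an EXE/Script Advanced sensor: it reads the `status`, `statuscode` and `message` fields from the `<meta>` block of a Nextcloud OCS XML response and prints a PRTG result document. This is not the code of PRTG_NextCloud.ps1. The sample response, the function names, the channel name and the idea that the channel refers to `NextCloudStatusCodeLookup` by its ID are all assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily shortened OCS response; a real one carries much more data.
SAMPLE_RESPONSE = """<ocs>
  <meta>
    <status>ok</status>
    <statuscode>200</statuscode>
    <message>OK</message>
  </meta>
  <data/>
</ocs>"""


def read_ocs_meta(xml_text):
    """Return the status, statuscode and message fields of an OCS response."""
    meta = ET.fromstring(xml_text).find("meta")
    if meta is None:
        raise ValueError("no <meta> element in response")
    return {
        "status": meta.findtext("status", default=""),
        "statuscode": int(meta.findtext("statuscode", default="0")),
        "message": meta.findtext("message", default=""),
    }


def to_prtg_xml(meta):
    """Build a minimal PRTG result document with a single channel."""
    root = ET.Element("prtg")
    result = ET.SubElement(root, "result")
    ET.SubElement(result, "channel").text = "Status Code"  # assumed channel name
    ET.SubElement(result, "value").text = str(meta["statuscode"])
    # ValueLookup names the lookup (by ID) that PRTG uses to turn the number
    # into a state and text; using this particular ID here is an assumption.
    ET.SubElement(result, "ValueLookup").text = "NextCloudStatusCodeLookup"
    return ET.tostring(root, encoding="unicode")


print(to_prtg_xml(read_ocs_meta(SAMPLE_RESPONSE)))
```

PRTG only accepts integer or float values in a channel, which is why text fields such as the status have to be mapped to numbers and then translated back through lookups.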